Section: New Results

Pervasive support for Smart Homes

Participants: Minh Tuan Ho, Michele Dominici, Bastien Pietropaoli, Frédéric Weis [contact].

Pervasive computing involves tight links between real-world activities and computing processes. While the perception of real-world events can be handled entirely by the application, we think that ad hoc approaches have limitations, in particular their complexity and the difficulty of reusing code across applications. Instead, we promote system-level abstractions that leverage tangible structures and processes. An important property of this approach is that applications operate, by design, in an implicit way ("in the background" of physical processes). They also often exhibit simpler architectures and "natural" scalability: being built upon existing real-world processes, they have a strongly distributed design that relies essentially on local interactions between physical entities. We are applying this approach to "Smart Homes". A Smart Home is a residence equipped with computing and information technology devices designed to collaborate in order to anticipate and respond to the needs of the occupants, promoting their comfort, convenience, security and entertainment while preserving their natural interaction with the environment.

Definition of a system architecture

In a classical "logical" approach, all the intelligence of the Smart Home is concentrated in a single entity that manages every device in the house. The sensors distributed in the environment have to send all the gathered data back to this central entity, which bears the whole burden of parsing the sensed information and inferring the policies to be applied. Our architecture instead follows a physical approach, where every device carries a part of the global intelligence: each entity analyzes the part of the information relevant to its goal, derives useful data, and communicates meaningful information to the other devices.
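To make the contrast with the centralized approach concrete, here is a minimal Python sketch of the physical approach, in which each device filters the readings down to those relevant to its own goal and shares only a derived, meaningful message. The `Device` class, the sensor kinds and the thresholds are hypothetical illustrations, not part of the described system; a real deployment would exchange messages over the network rather than through an in-memory outbox.

```python
from typing import Callable, Dict, List, Optional, Set

Readings = Dict[str, float]


class Device:
    """Hypothetical device holding its own share of the home's intelligence."""

    def __init__(self, name: str, kinds: Set[str],
                 derive: Callable[[Readings], Optional[str]]):
        self.name = name
        self.kinds = kinds            # sensor kinds relevant to this device's goal
        self.derive = derive          # local rule: relevant readings -> message or None
        self.outbox: List[str] = []   # messages shared with the other devices

    def observe(self, readings: Readings) -> None:
        # Keep only the part of the information that matters to this device.
        local = {k: v for k, v in readings.items() if k in self.kinds}
        message = self.derive(local)
        if message is not None:
            self.outbox.append(f"{self.name}: {message}")


# Hypothetical devices and thresholds, for illustration only.
heater = Device("heater", {"temperature"},
                lambda r: "too cold" if r.get("temperature", 20.0) < 18.0 else None)
lamp = Device("lamp", {"luminosity", "presence"},
              lambda r: "dark and occupied"
              if r.get("luminosity", 1000.0) < 50.0 and r.get("presence", 0.0) > 0.5
              else None)

readings = {"temperature": 16.5, "luminosity": 300.0, "presence": 1.0, "humidity": 0.4}
for device in (heater, lamp):
    device.observe(readings)

print(heater.outbox)  # ['heater: too cold']
print(lamp.outbox)    # []
```

No device sees or forwards the full stream: the heater ignores luminosity and humidity, and the lamp stays silent because the room is bright enough.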

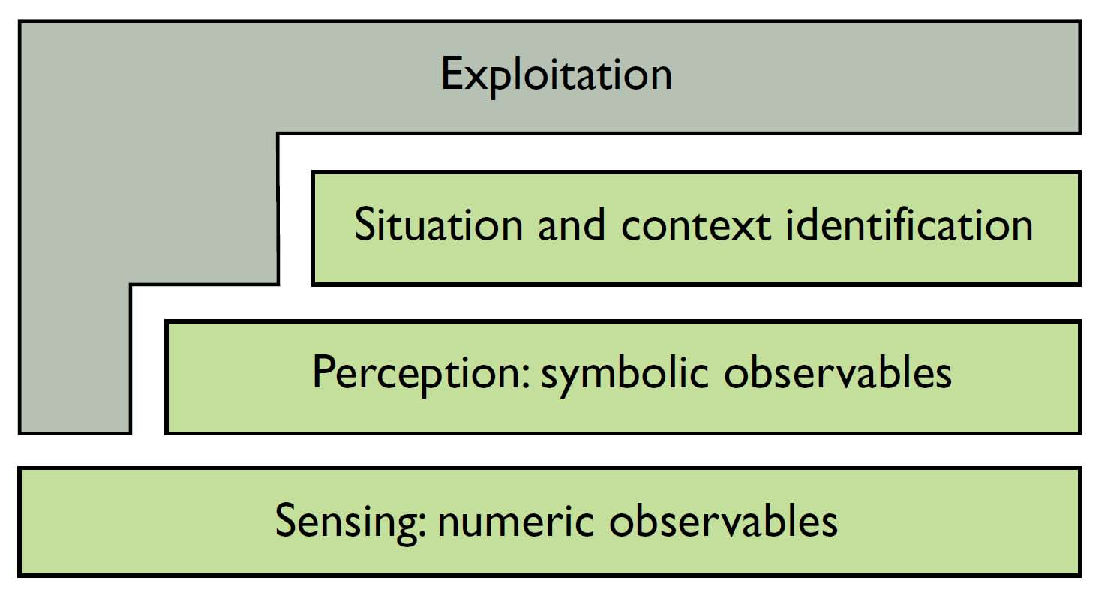

Our work is based on a four-layer model [8], as shown in Figure 3. The first layer of our system would ideally be composed simply of sensors, but some constraints must be satisfied. To reduce the overall system cost and to protect the inhabitants' privacy, the number of sensors deployed in the environment has to be kept as low as possible. However, a large number of different sensors is required to capture the various pieces of context, and redundancy can significantly increase the reliability of the sources. With this in mind, the sensors are grouped into nodes. These nodes can preprocess the data with simple computations such as minimum, maximum and average. They also enable the sensors to communicate, using, for instance, 6LoWPAN (IPv6 over Low-Power Wireless Personal Area Networks).
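The node-level preprocessing can be sketched as follows, assuming a node buffers a window of raw samples per sensor and transmits only a summary. The function name `summarize`, the window contents and the choice to skip sensors with no samples are assumptions for illustration; the actual node firmware is not described here.

```python
from statistics import mean
from typing import Dict, List


def summarize(samples: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Reduce each sensor's raw samples to min, max and average (hypothetical helper)."""
    return {
        sensor: {"min": min(values), "max": max(values), "avg": mean(values)}
        for sensor, values in samples.items()
        if values  # skip sensors that produced no sample in this window
    }


window = {
    "temperature": [20.5, 21.0, 21.5],
    "luminosity": [120.0, 80.0, 100.0],
    "motion": [],
}
summary = summarize(window)
print(summary)
# {'temperature': {'min': 20.5, 'max': 21.5, 'avg': 21.0},
#  'luminosity': {'min': 80.0, 'max': 120.0, 'avg': 100.0}}
```

Sending three values per sensor instead of every sample reduces the traffic that a low-power radio link has to carry.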

In the second layer, the raw data are processed to obtain more abstract data about the context and the ongoing situations, for instance the presence of someone in a room, the number of people in that room, or a person's posture. Raw data are aggregated by a data fusion algorithm; the one we adopted relies on the theory of belief functions, also called the theory of evidence [6].
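The theory of belief functions (also known as Dempster-Shafer theory) typically combines evidence from independent sources with Dempster's rule of combination. The sketch below applies that rule to two hypothetical sources reporting on the presence of someone in a room, over the frame {present, absent}. The sensor names and mass values are invented for illustration, and the report does not state which combination rule the team uses, so Dempster's rule here is an assumption.

```python
from itertools import product
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def combine(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule of combination for two mass functions on the same frame."""
    joint: Mass = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            joint[inter] = joint.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass given to contradictory hypotheses
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalize so the non-conflicting masses sum to 1.
    return {s: w / (1.0 - conflict) for s, w in joint.items()}


PRESENT = frozenset({"present"})
ABSENT = frozenset({"absent"})
EITHER = PRESENT | ABSENT  # ignorance: "present or absent"

# Hypothetical sources: a motion sensor and a CO2 sensor.
motion = {PRESENT: 0.7, EITHER: 0.3}
co2 = {PRESENT: 0.5, ABSENT: 0.2, EITHER: 0.3}

fused = combine(motion, co2)
for hypothesis in (PRESENT, ABSENT, EITHER):
    print(sorted(hypothesis), round(fused[hypothesis], 3))
# ['present'] 0.826
# ['absent'] 0.07
# ['absent', 'present'] 0.105
```

Mass left on `EITHER` expresses ignorance rather than evidence for either hypothesis: the fused mass on `PRESENT` (about 0.826) is the belief in presence, and adding the mass on `EITHER` gives its plausibility (about 0.930).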

The bridge between the second and the third layers is built by integrating the results of sensor data fusion into a context model called Context Spaces. This model uses geometrical metaphors to describe context and situations, relying on the following concepts: context attributes, the application space, situation spaces and the context state. Context attributes are the types of information that are relevant to and obtainable by the system; in our case, their values are provided by the perception layer together with a degree of confidence, which is needed to cope with the intrinsic uncertainty of sensing systems in real-world scenarios. In the situation and context identification layer, the context state provided by the perception layer is analyzed to infer the ongoing situation spaces (which represent real-life situations) and to produce a measure of confidence in their occurrence. Since the same context state can correspond to several different situation spaces (and vice versa), reasoning techniques are needed to discern the actual ongoing real-life situations despite uncertainty [5].
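As a rough illustration of situation reasoning, the sketch below scores situation spaces against a context state in the spirit of Context Spaces: each situation space gives an acceptable interval and a weight for some context attributes, and its confidence is the weighted sum of the attributes whose values fall inside their intervals. Scaling each contribution by the confidence reported by the perception layer, as well as the situations, attributes, intervals and weights, are assumptions made for this example rather than details from the report.

```python
from typing import Dict, Tuple

Region = Tuple[float, float]                      # acceptable interval [low, high]
SituationSpace = Dict[str, Tuple[Region, float]]  # attribute -> (region, weight); weights sum to 1
ContextState = Dict[str, Tuple[float, float]]     # attribute -> (value, confidence from perception)


def situation_confidence(space: SituationSpace, state: ContextState) -> float:
    """Weighted, confidence-scaled count of attributes inside their regions (assumed scoring)."""
    score = 0.0
    for attribute, ((low, high), weight) in space.items():
        if attribute not in state:
            continue  # a missing attribute contributes nothing
        value, confidence = state[attribute]
        if low <= value <= high:
            score += weight * confidence
    return score


# Hypothetical situation spaces and context state.
sleeping = {
    "presence_bedroom": ((0.5, 1.0), 0.4),
    "luminosity": ((0.0, 20.0), 0.3),
    "lying_posture": ((0.5, 1.0), 0.3),
}
watching_tv = {
    "presence_living_room": ((0.5, 1.0), 0.5),
    "tv_power": ((50.0, 300.0), 0.5),
}
state = {
    "presence_bedroom": (1.0, 0.9),
    "luminosity": (5.0, 0.8),
    "lying_posture": (1.0, 0.6),
    "presence_living_room": (0.0, 0.9),
    "tv_power": (0.0, 1.0),
}
for name, space in {"sleeping": sleeping, "watching_tv": watching_tv}.items():
    print(name, round(situation_confidence(space, state), 2))
# sleeping 0.78
# watching_tv 0.0
```

Here the context state supports `sleeping` and rules out `watching_tv`; when several situation spaces obtain similar scores, further reasoning is needed to decide which one is actually occurring.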

Experimentation

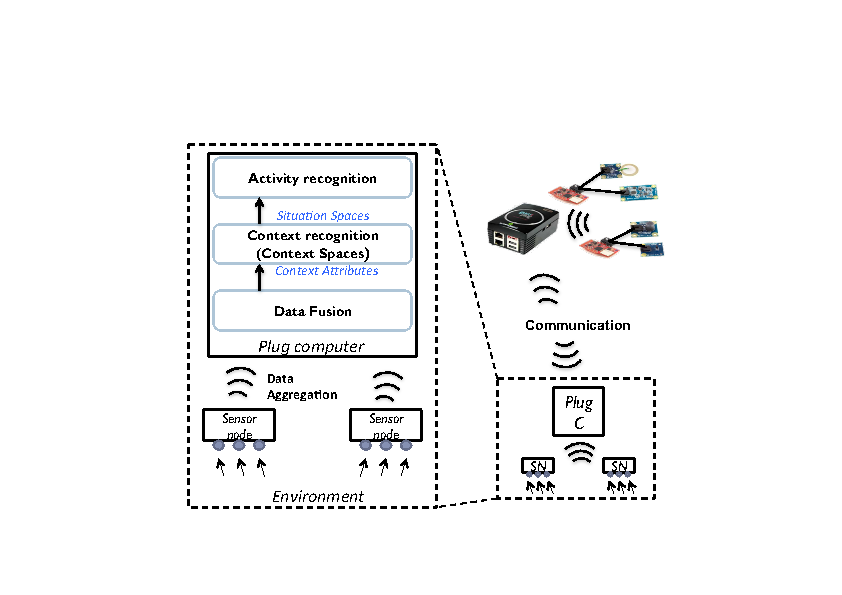

The computations required by the second and third layers to obtain abstract data and to analyze context and situations are too heavy to run on our sensor nodes. To remedy this problem, more powerful nodes acting as sinks are used. These nodes are small "plug and play" computers called plug computers. Their role is to gather data from the sensor nodes and to perform both data fusion, which produces the context attributes, and context space reasoning, which identifies the ongoing situations.
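The role of the plug computer can be sketched as a small pipeline that buffers the summaries received from sensor nodes, then runs a fusion step and a reasoning step on each observation window. `PlugComputerSink` and the placeholder `fuse` and `reason` functions are hypothetical; in the described system the fusion step relies on belief functions and the reasoning step on Context Spaces, which the simple averaging below merely stands in for.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Summary = Dict[str, float]  # values sent by one sensor node, e.g. {"motion": 1.0}


class PlugComputerSink:
    """Hypothetical sink: buffers node summaries, then runs fusion and reasoning."""

    def __init__(self,
                 fuse: Callable[[Dict[str, List[float]]], Dict[str, float]],
                 reason: Callable[[Dict[str, float]], Dict[str, float]]):
        self.fuse = fuse      # data fusion: pooled node values -> context attributes
        self.reason = reason  # context reasoning: context attributes -> situation confidences
        self.buffer: Dict[str, List[float]] = defaultdict(list)

    def receive(self, summary: Summary) -> None:
        # Gather step: pool the values reported by every node, per sensor kind.
        for kind, value in summary.items():
            self.buffer[kind].append(value)

    def step(self) -> Dict[str, float]:
        attributes = self.fuse(dict(self.buffer))
        self.buffer.clear()  # start a fresh observation window
        return self.reason(attributes)


def fuse(buffer: Dict[str, List[float]]) -> Dict[str, float]:
    # Placeholder fusion: share of nodes reporting motion.
    votes = buffer.get("motion", [])
    return {"presence": sum(votes) / len(votes) if votes else 0.0}


def reason(attributes: Dict[str, float]) -> Dict[str, float]:
    # Placeholder reasoning: one situation driven by one attribute.
    return {"someone_home": attributes["presence"]}


sink = PlugComputerSink(fuse, reason)
sink.receive({"motion": 1.0, "temperature": 21.0})
sink.receive({"motion": 0.0})
situations = sink.step()
print(situations)  # {'someone_home': 0.5}
```

Keeping fusion and reasoning as injected functions lets the sensor nodes stay simple while the heavier algorithms evolve on the sink.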

Figure 4 gives an overview of our system architecture, which was demonstrated by the ACES team at EDF R&D in November.